FinQA DeepDive

Q&A system for financial reports and analyst reviews

Xianghui Xin

2023-37252

Julian Felix Kieslinger

2025-82736

Jiayue Wang

2022-21806

Team: 😈🔥

Today:

https://finqa-hallumaker-deepdive.pages.dev

Presentation Outline

- Team Summary

- Strategy

- Initial Results

- Question Categorization

- Fiscal Year Handling Optimization

- Tool extension

- Difficulties Encountered

- Future Work

Thanks to Team Paris Baguette, whose presentation structure we used as a reference.

Team Summary

| Member | Role | Done | To Do |
|---|---|---|---|
| Xianghui Xin | Project Lead | System architecture, dynamic question categorization, difficulty-based strategy | MCP integration optimization, ReAct agent optimization |
| Julian Kieslinger | Financial Expert | Model evaluation, static question categorization | Financial tools, document embedding |
| Jiayue Wang | Data Specialist | Fiscal year handling optimization (retrieval scope extension, temporal alignment), tool extension | Database management, vector DB optimization |

Collaboration Strategy

- Weekly team meetings to discuss progress

- Task assignment based on expertise

- Regular code reviews and pair programming

Strategy

flowchart LR
%% Main flow
User([User Query]) --> Client["MCP Client (MultiServerMCPClient)"]
%% Client to Router communication
Client --> Router["Question Router (Strategy Selector)"]
%% Router to Strategy selection
Router -->|"Applies strategy based on level"| ReAct["ReAct Agent (LangGraph)"]
%% Server section with detailed communication
subgraph Servers["MCP Servers (Protocol-based Tool Providers)"]
    direction LR
    ReAct -->|"Tool calls"| Math["Math Server (add, multiply, divide)"]
    Math -->|"Calculation results"| ReAct
    ReAct -->|"Tool calls"| Finance["Finance Server (EPS, profit margins)"]
    Finance -->|"Financial metrics"| ReAct
    ReAct -->|"Search queries"| Chroma["Chroma Server (document retrieval)"]
    Chroma -->|"Relevant text chunks"| ReAct
    ReAct -->|"SQL queries"| SQLite["SQLite Server (company data)"]
    SQLite -->|"Structured data"| ReAct
end
%% Database connections with data flow details
subgraph Databases["Data Sources"]
    direction LR
    Chroma -->|"Vector search"| ChromaDB[(Vector DB Financial reports)]
    ChromaDB -->|"Matching documents"| Chroma
    SQLite -->|"SQL queries"| CompanyDB[(Company DB Corporate data)]
    CompanyDB -->|"Query results"| SQLite
end
%% Final answer flow
ReAct -->|"Generated answer"| Answer([Final Answer])
%% Styling with rounded corners
classDef userNode fill:#ffebee,stroke:#c62828,color:#c62828,stroke-width:2px,rx:20,ry:20;
classDef clientNode fill:#e8eaf6,stroke:#3f51b5,color:#3f51b5,stroke-width:2px,rx:10,ry:10;
classDef reactNode fill:#b2dfdb,stroke:#00796b,color:#00796b,stroke-width:2px,rx:10,ry:10;
classDef serverNode fill:#f5f5f5,stroke:#424242,color:#424242,stroke-width:2px,rx:5,ry:5;
classDef dbNode fill:#ede7f6,stroke:#4527a0,color:#4527a0,stroke-width:2px,rx:0,ry:0;
classDef answerNode fill:#e8f5e9,stroke:#2e7d32,color:#2e7d32,stroke-width:2px,rx:20,ry:20;
classDef subgraphStyle fill:#fafafa,stroke:#9e9e9e,stroke-width:1px,rx:10,ry:10,color:#424242;
%% Apply styles
class User userNode;
class Client clientNode;
class ReAct reactNode;
class Math,Finance,Chroma,SQLite serverNode;
class ChromaDB,CompanyDB dbNode;
class Answer answerNode;
class Servers,Databases subgraphStyle;
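For orientation, a minimal sketch of how the four servers in the diagram might be declared for `MultiServerMCPClient` (from `langchain-mcp-adapters`), which takes a dict mapping server names to connection settings. The script names (`math_server.py`, etc.) are hypothetical placeholders, not the project's actual files; the dict is built on its own so the sketch runs without the library installed.

```python
# Hypothetical connection settings in the dict shape accepted by
# langchain_mcp_adapters.client.MultiServerMCPClient (stdio transport).
# Script paths are placeholders, not the project's real file names.
SERVER_SCRIPTS = {
    "math": "math_server.py",        # add, multiply, divide
    "finance": "finance_server.py",  # EPS, profit margins
    "chroma": "chroma_server.py",    # document retrieval from the vector DB
    "sqlite": "sqlite_server.py",    # structured company data
}

# One stdio subprocess per server, launched with the Python interpreter.
MCP_CONNECTIONS = {
    name: {"command": "python", "args": [script], "transport": "stdio"}
    for name, script in SERVER_SCRIPTS.items()
}

if __name__ == "__main__":
    for name, conn in MCP_CONNECTIONS.items():
        print(name, conn)
```

With the library installed, this dict would be passed as `MultiServerMCPClient(MCP_CONNECTIONS)`, and the tools the client exposes would then be handed to the LangGraph ReAct agent.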

Initial Results

Baseline Performance

$ python score.py

Overall Accuracy: 0.2800 (14/50)

Our initial run reached an accuracy of 28% (14/50).

Error Analysis

- Hallucination - LLM generating incorrect financial data

- Tool Selection - Choosing wrong tools for specific tasks

- Temporal Confusion - Mixing data from different fiscal years

- Complex Calculations - Errors in multi-step financial formulas

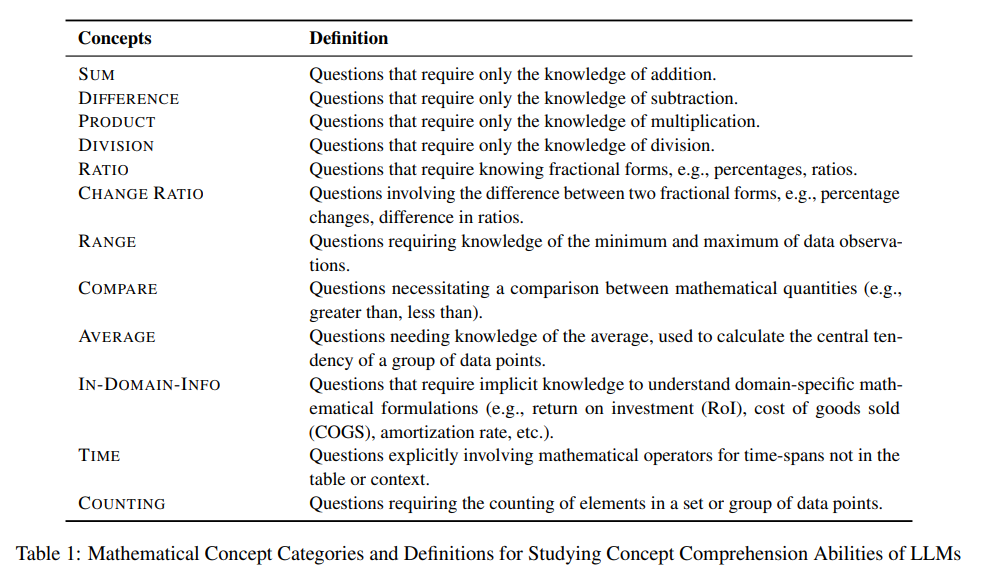

Question Categorization

flowchart LR
A[Incoming Question] --> B[LLM Question Analyzer]
B --> C{Difficulty Assessment}
C -->|"Simple fact retrieval (single data point lookup)"| L1[Level 1]
C -->|"Simple calculations on single document"| L2[Level 2]
C -->|"Multi-step calculations or temporal reasoning"| L3[Level 3]
C -->|"Calculations involving multiple documents/companies"| L4[Level 4]
C -->|"Complex reasoning with multiple factors/filtering"| L5[Level 5]
%% Processing strategies with details
L1 --> P1[Simple RAG Strategy]
L2 --> P1
L3 --> P2[Tool-First Strategy]
L4 --> P2
L5 --> P3[Agentic RAG Strategy]
P1 -->|"Direct retrieval + concise numerical answer"| Result1[Answer]
P2 -->|"Extract metrics → Temporal alignment → Retrieve data → Calculate"| Result2[Answer]
P3 -->|"Entity identification → Data gathering → Calculation → Comparison"| Result3[Answer]
%% Styling
classDef level1 fill:#e0f7fa,stroke:#0288d1;
classDef level2 fill:#e8f5e9,stroke:#2e7d32;
classDef level3 fill:#fff3e0,stroke:#fb8c00;
classDef level4 fill:#ede7f6,stroke:#5e35b1;
classDef level5 fill:#ffebee,stroke:#d32f2f;
classDef process fill:#f5f5f5,stroke:#333,stroke-dasharray:4 2;
classDef llm fill:#f8bbd0,stroke:#880e4f;
class L1 level1
class L2 level2
class L3 level3
class L4 level4
class L5 level5
class P1,P2,P3 process
class B,C llm
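The routing step in the diagram is essentially a lookup from level to strategy. A minimal sketch, assuming the analyzer replies with free text that states the level before any other digit; `parse_level`, `route`, and the Level 5 fallback for unparseable replies are hypothetical choices, not the project's code.

```python
import re
from enum import Enum


class Strategy(Enum):
    SIMPLE_RAG = "Simple RAG"    # Levels 1-2
    TOOL_FIRST = "Tool-First"    # Levels 3-4
    AGENTIC_RAG = "Agentic RAG"  # Level 5


# Level -> strategy mapping taken from the categorization diagram.
LEVEL_TO_STRATEGY = {
    1: Strategy.SIMPLE_RAG,
    2: Strategy.SIMPLE_RAG,
    3: Strategy.TOOL_FIRST,
    4: Strategy.TOOL_FIRST,
    5: Strategy.AGENTIC_RAG,
}


def parse_level(analyzer_reply: str) -> int:
    """Return the first digit 1-5 in the analyzer's reply.

    Assumes the reply states the level before any other digit (e.g. a year).
    """
    match = re.search(r"[1-5]", analyzer_reply)
    # Hypothetical fallback: an unparseable reply is treated as Level 5,
    # so the question gets the most thorough (agentic) strategy.
    return int(match.group()) if match else 5


def route(analyzer_reply: str) -> Strategy:
    """Map the analyzer's reply to a processing strategy."""
    return LEVEL_TO_STRATEGY[parse_level(analyzer_reply)]


if __name__ == "__main__":
    print(route("Level 3: multi-step calculation"))  # Strategy.TOOL_FIRST
```

Keeping the mapping in a plain dict makes it easy to experiment with reassigning levels (for example, moving Level 4 to the agentic strategy) without touching the analyzer prompt.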

Question Categorization Results

Improved Performance

Overall Accuracy: 0.3000 (15/50)

Question Distribution:

Level 1: 16 questions - Accuracy: 0.6250 (10/16)

Level 2: 8 questions - Accuracy: 0.2500 (2/8)

Level 3: 2 questions - Accuracy: 0.0000 (0/2)

Level 4: 12 questions - Accuracy: 0.2500 (3/12)

Level 5: 12 questions - Accuracy: 0.0000 (0/12)

Level and Correctness Summary:

levels: 12111 11111 42421 42324 23112

21111 55455 55444 55555 44454

answer: oxoox oooxo xooxx xoxxo xxoox

xxoxx xxxxx xxxox xxxxx xxxxx
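The two strings above encode one digit (difficulty level) and one mark (`o` = correct, `x` = wrong) per question, in the same order, grouped in fives for readability. A short sketch that recovers the per-level table from them; it reproduces the 10/16, 2/8, 0/2, 3/12 and 0/12 breakdown listed on this slide. The function name is illustrative.

```python
from collections import Counter

# Copied from the "Level and Correctness Summary" above.
LEVELS = "12111 11111 42421 42324 23112 21111 55455 55444 55555 44454"
ANSWERS = "oxoox oooxo xooxx xoxxo xxoox xxoxx xxxxx xxxox xxxxx xxxxx"


def per_level_accuracy(levels: str, answers: str) -> dict[int, tuple[int, int]]:
    """Return {level: (correct, total)} from two position-aligned strings."""
    lv = levels.replace(" ", "")
    ans = answers.replace(" ", "")
    if len(lv) != len(ans):
        raise ValueError("level and answer strings must have the same length")
    totals = Counter(int(ch) for ch in lv)
    # Counter returns 0 for levels with no correct answers.
    correct = Counter(int(ch) for ch, mark in zip(lv, ans) if mark == "o")
    return {level: (correct[level], totals[level]) for level in sorted(totals)}


if __name__ == "__main__":
    for level, (c, t) in per_level_accuracy(LEVELS, ANSWERS).items():
        print(f"Level {level}: {c}/{t} = {c / t:.4f}")
```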

Number of questions hitting the recursion limit: 26

Comparison with Baseline

Simple RAG Strategy Only (2 percentage points lower)

Overall Accuracy: 0.2800 (14/50)

Question distribution by level:

LEVEL_1: 14 questions (28.0%)

LEVEL_2: 9 questions (18.0%)

LEVEL_3: 1 questions (2.0%)

LEVEL_4: 15 questions (30.0%)

LEVEL_5: 11 questions (22.0%)

Level and Correctness Summary:

levels: 12111 11111 42421 42434 24212

22111 54545 55444 55555 44454

answer: oxoox oooxo xxxxx xoxxo oxoox

xxoxx xxxxx xxoxx xxxxx xxxxx

Level Distributions (Second Run)

Fiscal Year Handling Optimization

Retrieval scope extension

- Retrieve documents within five years after the given fiscal year.

- accuracy: ~0.32
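A minimal sketch of the retrieval-scope extension, assuming each chunk in the vector DB carries an integer `fiscal_year` metadata field (a hypothetical name) and that the window includes the given year itself; the exact boundaries used in the project are not stated on the slide.

```python
def fiscal_year_window(fiscal_year: int, years_after: int = 5) -> tuple[int, int]:
    """Inclusive (first, last) report years searched for a given fiscal year."""
    return fiscal_year, fiscal_year + years_after


def chroma_where_filter(fiscal_year: int, years_after: int = 5) -> dict:
    """Metadata filter in Chroma's `where` operator syntax.

    Assumes chunks were stored with an integer `fiscal_year` metadata field.
    """
    first, last = fiscal_year_window(fiscal_year, years_after)
    return {"$and": [
        {"fiscal_year": {"$gte": first}},
        {"fiscal_year": {"$lte": last}},
    ]}


def filter_docs(docs: list[dict], fiscal_year: int, years_after: int = 5) -> list[dict]:
    """Same window applied in plain Python to dicts with a `fiscal_year` key."""
    first, last = fiscal_year_window(fiscal_year, years_after)
    return [d for d in docs if first <= d["fiscal_year"] <= last]
```

The filter dict would be passed as the `where=` argument of a Chroma collection's `query` call, so the window is enforced by the vector store rather than after retrieval.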

Temporal Alignment

- Normalize relative time expressions to absolute years based on the query context.

- LLM-based: prompt the LLM to extract relative time expressions and convert them to absolute years.

- Rule-based: identify and replace common relative year expressions (this year, previous year, …).

- accuracy: ~0.38
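The rule-based variant can be sketched with a handful of regular expressions. The rule table, the small number-word list, and `normalize_relative_years` are hypothetical illustrations rather than the project's actual rules; the 2025 default mirrors the fallback year used in the LLM prompt that follows.

```python
import re

# Hypothetical rule table: relative phrase -> offset from the reference year.
RELATIVE_YEAR_RULES = [
    (r"\b(?:this|current) (?:fiscal )?year\b", 0),
    (r"\b(?:the )?(?:previous|prior|last) (?:fiscal )?year\b", -1),
    (r"\ba year (?:before|earlier|ago)\b", -1),
    (r"\bnext (?:fiscal )?year\b", 1),
]

NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
                "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10}

# "N years ago/before/earlier", with N as digits or a small number word.
N_YEARS_AGO = re.compile(
    r"\b(\d+|" + "|".join(NUMBER_WORDS) + r") years? (?:ago|before|earlier)\b",
    re.IGNORECASE,
)


def normalize_relative_years(question: str, reference_year: int = 2025) -> str:
    """Rewrite relative year phrases in `question` as absolute years."""
    text = question
    for pattern, offset in RELATIVE_YEAR_RULES:
        text = re.sub(pattern, str(reference_year + offset), text, flags=re.IGNORECASE)

    def years_back(m: re.Match) -> str:
        token = m.group(1).lower()
        n = int(token) if token.isdigit() else NUMBER_WORDS[token]
        return str(reference_year - n)

    return N_YEARS_AGO.sub(years_back, text)


if __name__ == "__main__":
    print(normalize_relative_years("Compare this year with six years ago.", 2020))
```

Rules like these are cheap and deterministic but only cover phrasings someone anticipated, which is one reason to pair them with an LLM rewrite.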

# `question` holds the user's original question.
# Literal JSON braces are doubled ({{ }}) so the f-string does not treat them as a placeholder.
prompt = f"""
You are a financial analyst assistant. The user will ask a question about a company using relative time like 'a year before', 'prior year', or 'six years ago'. Replace all relative year expressions with absolute years. If the current fiscal year is not provided, then use 2025.
Return the rewritten question.

Question: {question}

Return JSON like: {{"question": "..."}}
"""

Tool extension

Inspired by previous work, we extended the math and fin tools:

Math tools

- difference

- average

- sum_values

- convert_thousands_to_number

Fin tools

- calculate_current_ratio
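A minimal sketch of what the added tools might compute. The function names come from the slide, but the signatures and bodies are assumptions; in particular, `convert_thousands_to_number` is assumed to scale a figure reported "in thousands" to an absolute amount. In the MCP Python SDK, functions like these are typically exposed with `FastMCP`'s `@mcp.tool()` decorator; that wiring is omitted so the sketch runs standalone.

```python
def difference(a: float, b: float) -> float:
    """a - b, e.g. the change between two reporting periods."""
    return a - b


def average(values: list[float]) -> float:
    """Arithmetic mean; raises on an empty list instead of returning 0."""
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


def sum_values(values: list[float]) -> float:
    """Total of a list of figures."""
    return sum(values)


def convert_thousands_to_number(value_in_thousands: float) -> float:
    """Assumed meaning: a figure reported 'in thousands' -> absolute amount."""
    return value_in_thousands * 1_000


def calculate_current_ratio(current_assets: float, current_liabilities: float) -> float:
    """Current ratio = current assets / current liabilities."""
    if current_liabilities == 0:
        raise ValueError("current liabilities must be non-zero")
    return current_assets / current_liabilities
```

Raising on empty input or zero liabilities, rather than returning a placeholder, gives the agent an explicit error message it can react to instead of a silently wrong number.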

Difficulties Encountered

Technical Challenges

- Recursion Limit - Complex questions easily hit LangGraph's default 25-step recursion limit (26 questions did so in the categorized run)

- Categorization Strategy - Evaluating problem difficulty on the fly vs. beforehand

- Complex Query Performance - Poor results on Level 3-5 questions (0/2, 3/12 and 0/12 correct in the categorized run)

- Tool Selection Logic - Deciding which server to use

- Run-to-Run Stability - significant accuracy variation across repeated runs (five runs: 0.34, 0.32, 0.38, 0.38, 0.30)

- Lack of financial knowledge
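To put the run-to-run variation in numbers, a quick calculation over the five accuracies listed above using Python's standard `statistics` module. The best and worst runs differ by 0.08 (four questions out of 50), which is larger than the 0.02 gap between the categorized run and the Simple-RAG-only run, so single-run differences of a few points are best read with caution.

```python
import statistics

# Accuracies from five repeated runs, as listed above.
runs = [0.34, 0.32, 0.38, 0.38, 0.30]

mean = statistics.mean(runs)    # 0.344
stdev = statistics.stdev(runs)  # sample standard deviation, about 0.036
spread = max(runs) - min(runs)  # 0.08, i.e. 4 of 50 questions

print(f"mean={mean:.3f}  stdev={stdev:.3f}  range={spread:.2f}")
```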

Future Work

Planned Improvements

- Recursion Optimization - Reduce steps needed for complex queries

- Question Categorization - Improve category detection accuracy

- Performance for Level 3-5 - Focus on complex reasoning

- Multi-document Analysis - Better strategies for data spanning documents

Research Directions

- Exploring better RAG techniques for financial data

- Implementing a chain-of-thought workflow for complex calculations

- Building a benchmark for financial QA evaluation

Thank You

Questions?

This presentation was created with reveal.js, an HTML presentation framework.